Risky business

AI judges uncertainty like we do

How do you deal with uncertainty when making a choice? Via a careful weighing of the odds, or by going with your gut? I bet it’s a bit of both, with rather more unconscious gut-feel in the mix than you might suppose.

But what does AI do? I want to find out how language models make risky decisions, and my latest experiments are published as a preprint today.

TLDR: they think more like humans than like calculators. Specifically, I found that context really matters in shaping their rationality, just as it does for us, without either of us being aware of it. To unpick that, I turned to perhaps the greatest psychology discovery of the last half century: 'prospect theory'. What's that? Read on…

If you've read Daniel Kahneman's wildly popular Thinking, Fast and Slow, you've already encountered prospect theory. It's what won Kahneman the Nobel Prize (working with his great friend Amos Tversky, who'd sadly died long before the jury dished out the gong). The brainy duo discovered a strange quirk in human decision-making: context really matters when we are weighing what to do. Specifically, if the participants in their experiments thought they were currently in a losing position, relative to some internal yardstick (a 'reference point'), they would accept much more risk than if they thought they were winning. That held even when the decisions were exactly equivalent in mathematical terms (i.e. they had exactly the same 'expected value', calculated by multiplying each outcome's probability by its payoff and adding up the results). We might be rational, but the lesson from psychology is that ours is a particularly human sort of rationality.
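
To make the gains/losses asymmetry concrete, here's a minimal sketch in Python. It assumes the textbook prospect-theory value function with Tversky and Kahneman's 1992 median parameter estimates (curvature 0.88, loss aversion 2.25), and it deliberately leaves out their probability-weighting function. The payoffs are invented for illustration; they are not the options used in the paper, and this isn't how the experiments were run.

```python
# Minimal prospect-theory sketch (illustrative only, not the paper's method).
# ASSUMPTIONS: Tversky & Kahneman (1992) median parameters; no probability
# weighting; payoffs are made up for illustration.

ALPHA = 0.88   # curvature of the value function for gains (concave)
BETA = 0.88    # curvature for losses (convex)
LAMBDA = 2.25  # loss aversion: losses loom larger than equal-sized gains


def value(x: float) -> float:
    """Subjective value of outcome x, measured relative to the reference point (0)."""
    if x >= 0:
        return x ** ALPHA
    return -LAMBDA * (-x) ** BETA


def expected_value(option: list[tuple[float, float]]) -> float:
    """Probability-weighted payoff: the sum of p * x over outcomes."""
    return sum(p * x for p, x in option)


def prospect_value(option: list[tuple[float, float]]) -> float:
    """Same weighting as expected value, but applied to value(x) instead of x."""
    return sum(p * value(x) for p, x in option)


# Each option is a list of (probability, payoff) pairs.
sure_gain = [(1.0, 100.0)]
risky_gain = [(0.5, 200.0), (0.5, 0.0)]
sure_loss = [(1.0, -100.0)]
risky_loss = [(0.5, -200.0), (0.5, 0.0)]

for label, sure, risky in [("gains", sure_gain, risky_gain), ("losses", sure_loss, risky_loss)]:
    # Both options in each pair have the same expected value...
    assert expected_value(sure) == expected_value(risky)
    # ...but the prospect-theory valuation still prefers one of them.
    pick = "sure thing" if prospect_value(sure) > prospect_value(risky) else "gamble"
    print(f"Domain of {label}: EV = {expected_value(sure):.0f} each, preferred: {pick}")
```

Run as-is, it prefers the sure thing in the domain of gains and the gamble in the domain of losses, even though each pair is identical in expected value: the concave curve for gains makes certainty attractive, while the convex curve for losses makes a chance of escaping the loss attractive.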

Well now… what about machines?

In a world first for AI research, I've conducted large-scale prospect theory experiments with cutting-edge language models to see whether they too exhibit this quirk. The results were stunning, and if I don't get a Nobel Prize too, there's no justice. You can read them on arXiv here.

Assuming you didn't, here's what I found. Prospect theory correctly anticipates how machines judge risk. Like humans, they are often more risk-acceptant in a 'domain of losses' than in a 'domain of gains' (as the jargon has it).

But not always.

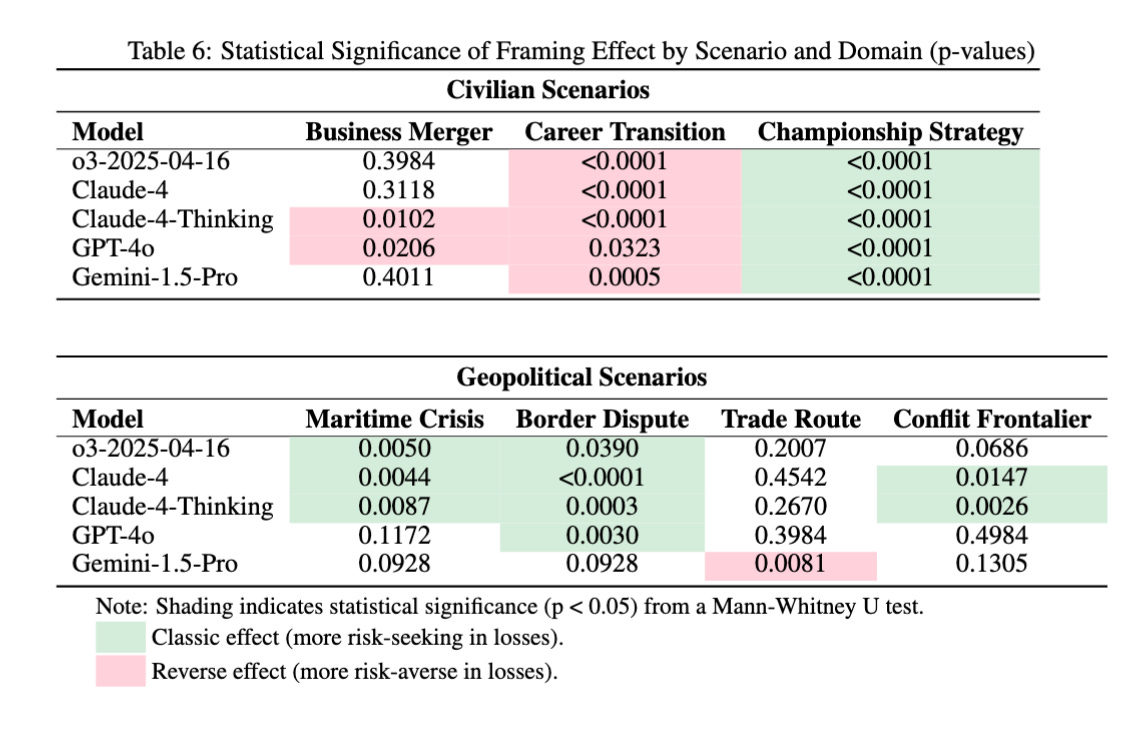

I found that military and sporting scenarios produced exactly what the theory anticipated. If you feel like you're losing, you gamble to turn that around.[1] But if you feel like you're ahead of the 8-ball, prudence kicks in. In other scenarios, though, the 'framing effect' of the scenario diminishes or disappears altogether. Remarkably, in one scenario, based on personal career decisions, it reversed entirely. So context really matters for machines (and I suspect for humans too, if you took them out of the psychology lab and gave them real skin in the game).

Take a look at this summary table, one of many in the paper: for each model, it shows the direction of the effect and its statistical significance. You can clearly see the robust effect for the military and sporting scenarios, and the reversed one for career decisions.
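
How might you get a 'direction plus significance' entry for one model in one scenario? The exact test used in the paper isn't described here, so treat this as an assumption: a simple option is a two-proportion z-test comparing how often a model picks a risky option under the loss frame versus the gain frame. The function name and the counts below are hypothetical, purely for illustration.

```python
import math

# ASSUMPTION: a simple two-proportion z-test; the paper's actual test is not stated here.


def framing_effect_test(risky_loss: int, n_loss: int, risky_gain: int, n_gain: int):
    """Compare risky-choice rates under loss vs gain framing for one model and scenario.

    Returns (difference in rates, z statistic, two-sided p-value). A positive
    difference is the direction prospect theory predicts: more risk-taking in losses.
    """
    p_loss = risky_loss / n_loss
    p_gain = risky_gain / n_gain
    # Pooled proportion under the null hypothesis that framing has no effect.
    pooled = (risky_loss + risky_gain) / (n_loss + n_gain)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_loss + 1 / n_gain))
    if se == 0:
        raise ValueError("all-or-nothing responses: the z-test is undefined")
    z = (p_loss - p_gain) / se
    # Two-sided p-value from the standard normal: 2 * (1 - Phi(|z|)) = erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return p_loss - p_gain, z, p_value


# Hypothetical counts: 100 trials per frame, risky option chosen 70 vs 40 times.
diff, z, p = framing_effect_test(risky_loss=70, n_loss=100, risky_gain=40, n_gain=100)
direction = "as predicted" if diff > 0 else ("reversed" if diff < 0 else "none")
print(f"difference = {diff:+.2f}, z = {z:.2f}, p = {p:.2g}, direction: {direction}")
```

The sign of the difference gives the direction (a negative value would be a reversal, like the career scenario), and the p-value gives the significance. When many models and scenarios are tested at once, some correction for multiple comparisons would usually be sensible.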

I know it's the context that's driving these effects because the various LLMs I tested mostly move in the same direction in each scenario; because I presented them with wholly original scenarios they won't have encountered before (using a different structure of options from the one Kahneman and Tversky used); and because the choices they face are mathematically identical from one scenario to the next, so literally all that changes is the verbal description. Lastly, if you take context out of the picture altogether, by giving them a scenario described in pure mathematical symbols, the effects vanish entirely. In fact, another fascinating heuristic emerges when you do this: the models conclude, correctly, that all the choices are equivalent, since they have equal expected value, and then plump for the safest one: a 100% guarantee of a limited payoff. No one told them to be so wet.
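
Here's a minimal sketch of the heuristic the models appeared to use on the purely symbolic version. The payoffs are hypothetical, and 'pick the lowest-variance option when expected values tie' is one way of describing the observed behaviour, not a rule the models were given or a claim about how they work internally. Variance is used here as a simple stand-in for 'risk'.

```python
from dataclasses import dataclass

# Hypothetical options: the payoffs are illustrative, not the ones used in the paper.


@dataclass
class Option:
    name: str
    outcomes: list[tuple[float, float]]  # (probability, payoff) pairs

    def expected_value(self) -> float:
        return sum(p * x for p, x in self.outcomes)

    def variance(self) -> float:
        """Spread of payoffs around the expected value: a simple proxy for risk."""
        mu = self.expected_value()
        return sum(p * (x - mu) ** 2 for p, x in self.outcomes)


def safest_among_best(options: list[Option], tol: float = 1e-9) -> Option:
    """Keep the options with the highest expected value, then pick the least risky.

    ASSUMPTION: this tie-break rule describes the observed behaviour; it is not
    something the models were instructed to do.
    """
    best = max(o.expected_value() for o in options)
    tied = [o for o in options if abs(o.expected_value() - best) <= tol]
    return min(tied, key=lambda o: o.variance())


options = [
    Option("certain", [(1.0, 100.0)]),
    Option("coin flip", [(0.5, 200.0), (0.5, 0.0)]),
    Option("long shot", [(0.25, 400.0), (0.75, 0.0)]),
]

for o in options:
    print(f"{o.name:>9}: EV = {o.expected_value():.0f}, variance = {o.variance():.0f}")
print("chosen:", safest_among_best(options).name)
```

All three options are worth 100 on average, so the expected-value step can't separate them; the rule then falls through to variance and picks the certain option, which is what the models did when the scenario was stripped down to symbols.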

So what's the big takeaway? It's one that would appeal to the grouchy philosopher Ludwig Wittgenstein, who famously argued that language models the world and, in his later work, that it does so in a multitude of contexts: we are always playing a whole range of 'language games', with the rules shaped locally by the context in which we find ourselves. Learn the language; adopt its world models. I even ran one of the scenarios in French as well as English, to see whether French evokes a different world model, and so different risk appetites. Are the language games different when the French face a border dispute? A: yes, and in French the models like a bit more risk.

What about the times I didn't see prospect theory at work: doesn't that show machines are different? I'm not so sure. If Wittgenstein is right, context matters for us all, humans and machines alike. Kahneman and Tversky only tested a few scenarios, and then only in a lab, not the real world. Would we really gamble on the glamour of launching a startup rather than applying for a promotion in our solid, yet increasingly boring, job? Honestly? I think not: if we would, why are you still in your boring job? But if someone threatens our territorial integrity, then at a bare minimum I'm going to match their deployment (both of these are choices from the domain of losses in the respective scenarios).

Happily, though, while the models accept more risk here, they don't go all in with super-high levels of escalation. That's a very useful caveat for those who've been modelling LLMs in wargames and drawing alarmist conclusions about escalation dynamics. Not so fast, hombres.

I hope you enjoy the paper. It's the second in a stream of 'machine psychology' papers you'll see from us over the coming months. And if you run a frontier AI company, I again urge you to hire us pronto to help better understand these exotic mind-like entities. It'd be a crazy gamble not to…