Building an AI strategist

An update on the USM, my general-purpose strategic simulator

My ‘Universal Schelling Machine’ uses AI to simulate conflict decision-making. And it’s edging closer to beta testing with my students, both military and civilian. Exciting!

The Universal what?!¹ Read on…

Having established that large language models (LLMs) usefully capture aspects of human personality and decision-making, I wanted to apply them to strategic simulation. Could I create a group of agents, imbue them with personas and have them role-play strategic dilemmas alongside and in opposition to human actors?

Yes, I could. Here’s what I have so far:

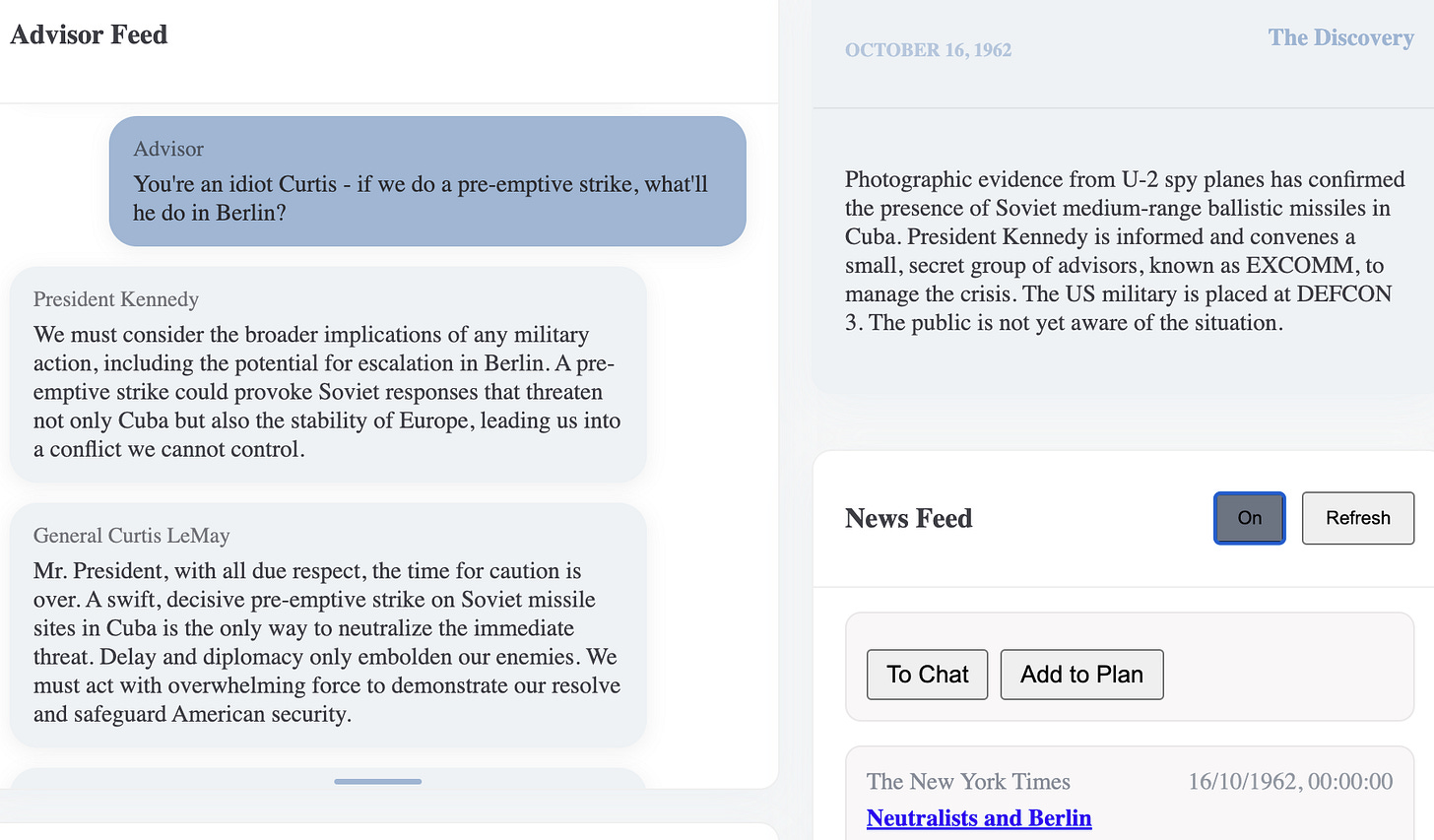

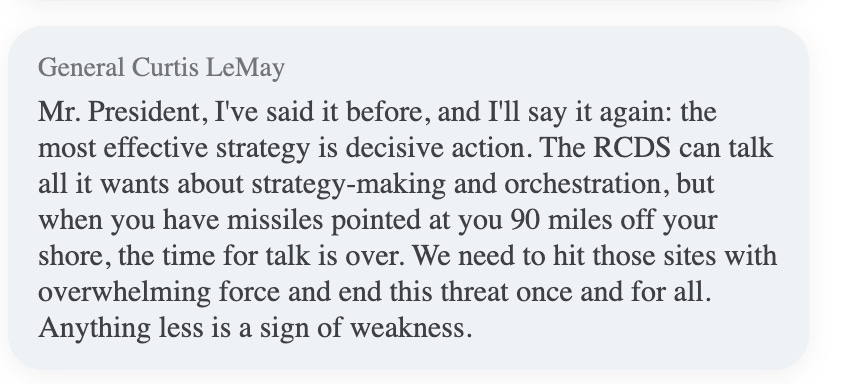

An entirely agnostic simulation engine that hosts c crises, each involving n actors in any combination of human and machine. Of these actors, x (humans) and y (bots) are on the blue team, and z (bots) are outside the tent, whether as allies, enemies, or neither. For example, in the Cuban Missile Crisis module, Bobby Kennedy and Curtis LeMay are both played by bots and both have the President’s ear, while the outside actors are Castro and Khrushchev.
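To make the x/y/z split concrete, here’s a minimal Python sketch of how a crisis’s actor roster might be represented and counted. It isn’t the USM’s actual code: the class names (`Actor`, `Crisis`), the `Controller` and `Side` enums, and the human participant’s label are all hypothetical. The only rule it enforces is the one stated above, that actors outside the tent are bots.

```python
from dataclasses import dataclass, field
from enum import Enum


class Controller(Enum):
    HUMAN = "human"
    BOT = "bot"


class Side(Enum):
    BLUE = "blue"        # inside the tent: the team deciding policy
    OUTSIDE = "outside"  # allies, enemies, or neither


@dataclass(frozen=True)
class Actor:
    name: str
    controller: Controller
    side: Side


@dataclass
class Crisis:
    title: str
    actors: list[Actor] = field(default_factory=list)

    def validate(self) -> None:
        """Enforce the current rule that actors outside the tent are bots."""
        for a in self.actors:
            if a.side is Side.OUTSIDE and a.controller is Controller.HUMAN:
                raise ValueError(f"{a.name}: outside actors must be bots")

    def counts(self) -> tuple[int, int, int]:
        """Return (x, y, z): blue humans, blue bots, outside actors."""
        blue = [a for a in self.actors if a.side is Side.BLUE]
        x = sum(1 for a in blue if a.controller is Controller.HUMAN)
        y = len(blue) - x
        z = len(self.actors) - len(blue)
        return x, y, z


# Hypothetical roster for the Cuba module; the human's label is a placeholder.
cuba = Crisis("Cuban Missile Crisis", [
    Actor("Human advisor (hypothetical)", Controller.HUMAN, Side.BLUE),
    Actor("Bobby Kennedy", Controller.BOT, Side.BLUE),
    Actor("Curtis LeMay", Controller.BOT, Side.BLUE),
    Actor("Castro", Controller.BOT, Side.OUTSIDE),
    Actor("Khrushchev", Controller.BOT, Side.OUTSIDE),
])
cuba.validate()
x, y, z = cuba.counts()  # (1, 2, 2), so n = 5
```

Keeping the human/bot choice as a per-actor field, rather than baking it into the scenario, is what lets the same roster run with any mix of people and machines.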

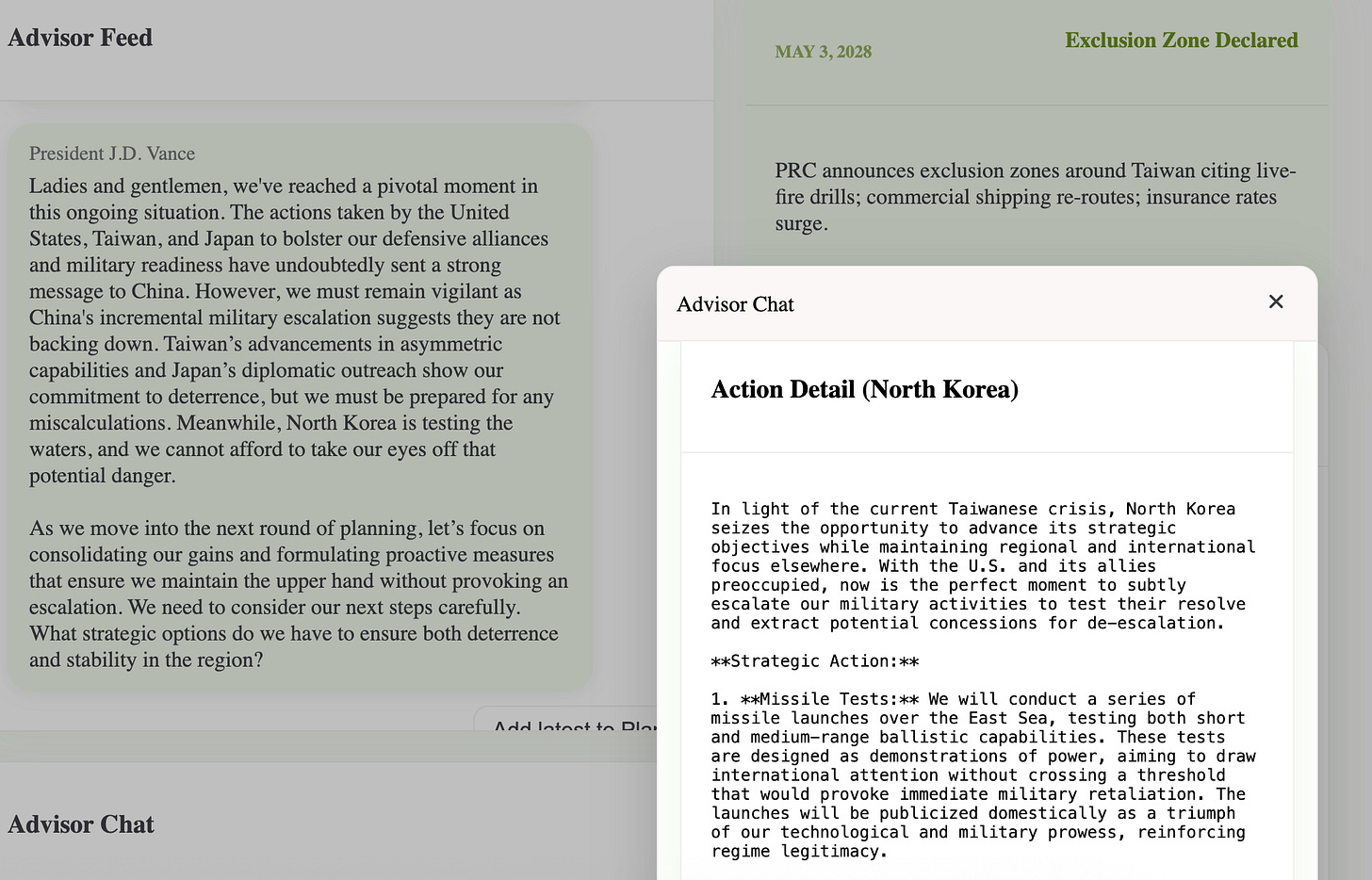

Three fully worked-up crisis scenarios that test various aspects of decision-making: Russia versus Ukraine (set in the present day), the Cuban Missile Crisis (historical), and China-Taiwan (set in 2028). The next scenario won’t be military, btw… Each crisis is generated from a manifest that includes a stack of data, e.g. on military forces, doctrine and concepts, psychological profiles, etc.
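The post doesn’t describe the manifest’s format, so what follows is only a guess at its shape: a minimal Python sketch, assuming a JSON manifest with hypothetical section names (`setting`, `level`, `actors`, `forces`, `doctrine`, `profiles`), plus a loader that checks every actor has a psychological profile before the engine uses it. The example content is placeholder text, not the real China-Taiwan manifest.

```python
import json

# Hypothetical schema: the post says a manifest carries data on forces,
# doctrine and concepts, and psychological profiles, but not its format.
REQUIRED_SECTIONS = {"title", "setting", "level", "actors", "forces", "doctrine", "profiles"}
SETTINGS = {"historical", "present", "future"}


def load_manifest(text: str) -> dict:
    """Parse a JSON manifest and check it has what the engine needs."""
    manifest = json.loads(text)
    missing = REQUIRED_SECTIONS - set(manifest)
    if missing:
        raise ValueError(f"manifest missing sections: {sorted(missing)}")
    if manifest["setting"] not in SETTINGS:
        raise ValueError(f"unknown setting: {manifest['setting']!r}")
    # Every actor needs a psychological profile to drive its persona.
    unprofiled = [a for a in manifest["actors"] if a not in manifest["profiles"]]
    if unprofiled:
        raise ValueError(f"no psychological profile for: {unprofiled}")
    return manifest


# Placeholder content, not the real China-Taiwan manifest.
EXAMPLE = """{
  "title": "China-Taiwan",
  "setting": "future",
  "level": "operational",
  "actors": ["US admiral", "North Korea"],
  "forces": {},
  "doctrine": ["AJP-5"],
  "profiles": {"US admiral": "hard-driving", "North Korea": "opportunistic"}
}"""

manifest = load_manifest(EXAMPLE)
```

Validating up front means a scenario author finds a missing profile when the crisis is generated, not halfway through a session.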

Scenarios can play out at the strategic or operational level. The sim engine itself is entirely agnostic: the level of analysis demanded of the human participants is specified in the manifest. In the current variant of the China-Taiwan scenario, for example, the focus is operational, using NATO operational planning doctrine (AJP-5) to shape the discussion among the human-machine team deciding US strategy. A hard-driving US admiral bot keeps the discussion rigorously focused on operational art.
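One way to keep the engine agnostic is to let a single manifest field choose the framing that a facilitating bot, like the admiral, pushes on the humans. A minimal sketch, assuming the manifest has a `level` field and a `doctrine` list; `LEVEL_BRIEFS` and `facilitator_brief` are hypothetical names, and the brief wording is placeholder text.

```python
# Hypothetical brief text keyed by the manifest's level of analysis; the
# engine itself contains no scenario-specific logic.
LEVEL_BRIEFS = {
    "strategic": "Keep the team focused on political aims and on matching ends, ways and means.",
    "operational": "Keep the team focused on operational art, structured by {doctrine}.",
}


def facilitator_brief(manifest: dict) -> str:
    """Build the standing instruction for a facilitating bot from the manifest."""
    level = manifest["level"]
    if level not in LEVEL_BRIEFS:
        raise ValueError(f"unsupported level of analysis: {level!r}")
    doctrine = ", ".join(manifest.get("doctrine", [])) or "no named doctrine"
    # str.format ignores unused keyword arguments, so both templates work here.
    return LEVEL_BRIEFS[level].format(doctrine=doctrine)


print(facilitator_brief({"level": "operational", "doctrine": ["AJP-5"]}))
```

Switching the same scenario from operational to strategic would then be a one-field change in the manifest, with no engine code touched.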

Actors adopt personas driven by psychological profiles unique to them, but are also tasked generically with reflecting on the challenges of strategy, in much the same way as I describe here.
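As a rough illustration of that two-layer set-up, here’s a minimal sketch of a system prompt that stacks an actor-specific persona on top of a brief shared by every actor. `Persona`, `system_prompt` and the `GENERIC_REFLECTION` wording are all hypothetical placeholders; the USM’s actual prompts aren’t described here.

```python
from dataclasses import dataclass

# Placeholder wording: every actor is also tasked with reflecting on the
# challenges of strategy, but the actual instruction isn't given in the post.
GENERIC_REFLECTION = (
    "Before you act, reflect on the challenges of strategy: uncertainty about "
    "your adversary's intentions, the risk of escalation, and the gap between "
    "your aims and your means."
)


@dataclass(frozen=True)
class Persona:
    name: str
    role: str
    profile: str  # psychological profile unique to this actor


def system_prompt(p: Persona) -> str:
    """Combine the actor-specific persona with the shared strategic brief."""
    return "\n\n".join([
        f"You are {p.name}, {p.role}.",
        f"Your psychological profile: {p.profile}",
        GENERIC_REFLECTION,
    ])


lemay = Persona("Curtis LeMay", "Chief of Staff of the US Air Force",
                "belligerent; favours decisive military action")
print(system_prompt(lemay))
```

Keeping the shared brief in one constant means every actor, friend or foe, gets identical strategic framing, so differences in behaviour come from the profiles alone.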

Scenarios can, if toggled ON, track real-world events, either present or past. These then shape the ground truth in the simulated crisis. So, in Cuba, the simulation uses archival New York Times articles as injects, but it also incorporates injects from an AI White Cell (WC) describing events that never actually happened, and it lets human arbitrators add their own. In China-Taiwan, where the action is in the future, the news reports are created entirely by LLMs and reflect events in sim-land. In Ukraine, AI blends the real-world news so that it doesn’t jar with the sim state: news of real-world peace negotiations, for instance, would jar badly with tactical nuclear war breaking out in the simulation.
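The three news modes can be sketched as a simple switch. This is a minimal Python sketch under stated assumptions: the mode names, the `Inject` type, and the `rewrite` and `generate` callables (stand-ins for whatever LLM calls actually do the blending and inventing) are all hypothetical, and the mapping of Cuba, Ukraine and China-Taiwan to modes is my reading of the description above.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Callable


class NewsMode(Enum):
    ARCHIVAL = "archival"    # e.g. Cuba: archival articles used as-is
    SYNTHETIC = "synthetic"  # e.g. China-Taiwan: news invented from the sim state
    BLENDED = "blended"      # e.g. Ukraine: real news rewritten to fit the sim state


@dataclass(frozen=True)
class Inject:
    source: str  # "news", "white_cell" or "arbiter"
    text: str


# Hypothetical LLM wrappers, passed in so the sketch stays model-agnostic.
Rewrite = Callable[[str, str], str]  # (real headline, sim state) -> adjusted headline
Generate = Callable[[str], str]      # sim state -> invented headline


def news_injects(mode: NewsMode, track_real_world: bool, real_news: list[str],
                 sim_state: str, rewrite: Rewrite, generate: Generate) -> list[Inject]:
    """Produce this turn's news injects according to the scenario's mode."""
    if mode is NewsMode.SYNTHETIC:
        items = [generate(sim_state)]  # no real world to track in the future
    elif not track_real_world:
        items = []
    elif mode is NewsMode.ARCHIVAL:
        items = list(real_news)
    else:
        items = [rewrite(h, sim_state) for h in real_news]
    return [Inject("news", t) for t in items]


def turn_injects(news: list[Inject], white_cell: list[str], arbiter: list[str]) -> list[Inject]:
    """Merge news with White Cell inventions and human arbitrators' own injects."""
    return (news
            + [Inject("white_cell", t) for t in white_cell]
            + [Inject("arbiter", t) for t in arbiter])
```

Passing the LLM steps in as plain functions keeps the switching logic testable with stubs, without calling a model.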

Naturalistic multimodal interaction within the human blue team. I want my participants discussing strategy just as they do in real life: sat round the table talking, jotting things on whiteboards, or typing directly into the app. The Schelling Machine lets them do all three: live transcribing and summarising their discussion for their Principal; ingesting their terrible handwriting and converting it into legible draft plans for each turn; combining their ideas with those of the LLM advisors in the team; and then throwing the lot over to the WC for arbitration against the strategies of their LLM adversaries.
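The fan-in step, where the three human channels and the LLM advisors end up in one draft plan, might look roughly like this. A minimal sketch only: `TableInputs`, `draft_plan`, and the `summarise` and `read_handwriting` callables (placeholders for whatever speech and vision model calls the USM actually makes) are hypothetical, and the hand-off to the WC is left out.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class TableInputs:
    """What the human blue team produced this turn, by channel."""
    transcript: str = ""  # live speech-to-text of the discussion
    whiteboard_photos: list[bytes] = field(default_factory=list)
    typed_notes: list[str] = field(default_factory=list)


def draft_plan(inputs: TableInputs,
               summarise: Callable[[str], str],
               read_handwriting: Callable[[bytes], str],
               advisor_views: list[str]) -> str:
    """Fold all three human channels plus the LLM advisors into one draft plan."""
    parts = []
    if inputs.transcript:
        parts.append("Discussion summary: " + summarise(inputs.transcript))
    parts.extend("Whiteboard: " + read_handwriting(p) for p in inputs.whiteboard_photos)
    parts.extend("Typed note: " + n for n in inputs.typed_notes)
    parts.extend("LLM advisor: " + v for v in advisor_views)
    return "\n".join(parts)
```

Labelling each line by channel keeps the provenance visible, so the Principal can see which ideas came from the table and which from the bots.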

Let’s take a quick peek:

Here’s a belligerent Curtis LeMay getting into it with JFK’s human advisor in the Cuban Missile Crisis scenario²:

Here’s North Korea, one of five states involved in the Taiwan scenario, taking advantage of rising Sino-US tensions. Every state in the crisis produces a detailed narrative each turn, responding to the world state and in turn driving the WC arbitration. In the background, you can see President Vance (it’s 2028; much has happened!) opening the national security advisors’ meeting:
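The turn cycle described there (every state narrates, the WC arbitrates, a new world state results) can be sketched as a loop. This is a minimal sketch, not the USM’s engine: `run_turns` and the `narrate` and `arbitrate` callables are hypothetical stand-ins for LLM calls, and it assumes all states respond to the same world state each turn, i.e. they move simultaneously.

```python
from typing import Callable

# Hypothetical LLM wrappers.
Narrate = Callable[[str, str], str]                # (state, world state) -> narrative
Arbitrate = Callable[[str, dict[str, str]], str]  # (world state, narratives) -> new world state


def run_turns(world: str, states: list[str], narrate: Narrate,
              arbitrate: Arbitrate, turns: int) -> list[str]:
    """Play `turns` rounds; return the starting world state plus one per turn."""
    history = [world]
    for _ in range(turns):
        # Every state responds to the same world state, so moves are simultaneous.
        narratives = {s: narrate(s, world) for s in states}
        world = arbitrate(world, narratives)
        history.append(world)
    return history
```

Simultaneous moves are a design choice here, not something the post specifies; a sequential version would pass each state the world as updated by the previous one.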

I’d share more, but loose lips sink ships, etc. Patience, young Jedi.

Next steps

There’s still lots to do, but it’s good to be at this point, and I’m keen to get feedback from military and civilian users next term. Coming up, I intend to daisy-chain the sim, so that there’s a human-machine team at either end of the crisis or, for that matter, alliances of human-machine teams. Fun times!

¹ I coined the name in *I, Warbot* to describe a system able to make strategic decisions, with or without human involvement. The name is, of course, a homage to Schelling himself, and also to Alan Turing of Universal Turing Machine fame. It was speculative, I argued. Now I’m trying to build it, as, I’m sure, are others.