The Great Persuader

AI enters the conversation

OpenAI’s Sam Altman worries that AI is headed for ‘superhuman persuasion’ abilities, well before it gets to Artificial General Intelligence, whatever that might be. I agree. Indeed, I think this is THE greatest impact AI will have on our lives in the short to medium term.

There’s a burgeoning field of, let’s call it, ‘machine psychology’. I’ve written about it already, and will again. In a nutshell, today’s AI is demonstrating abilities to infer human intentions – to understand that humans have particular viewpoints and, as a corollary, to exploit those viewpoints. Not, you understand, for its own nefarious ends (which it lacks and – I think – will lack for a long while yet); but certainly as a tool for other humans. In fact, even if there are no nefarious humans behind them, we’re still liable to be persuaded by plausible-seeming machines – in large part because of our tendency to readily anthropomorphise them.

Perhaps this all sounds farfetched – even like sci fi, of the sort seen in the hit movies Her, Ex Machina, and Blade Runner 2049 – all of which feature machines onto which gullible humans project all too human traits. But just wait until you’ve tried the new voice interaction feature with GPT-4, currently available only to paid subscribers.

If you think language models are primarily about drafting more-or-less convincing prose, you’ve got far too conservative a vision of the near future.

Now, there’s nothing more tedious than other people telling you about conversations they’ve had with GPT. It’s the new version of sharing your dreams: no one wants to know, trust me, dream sharers. So I’ll be ultra-concise about the recent conversation I had with GPT-4 where we discussed these issues using voice interaction. Some observations:

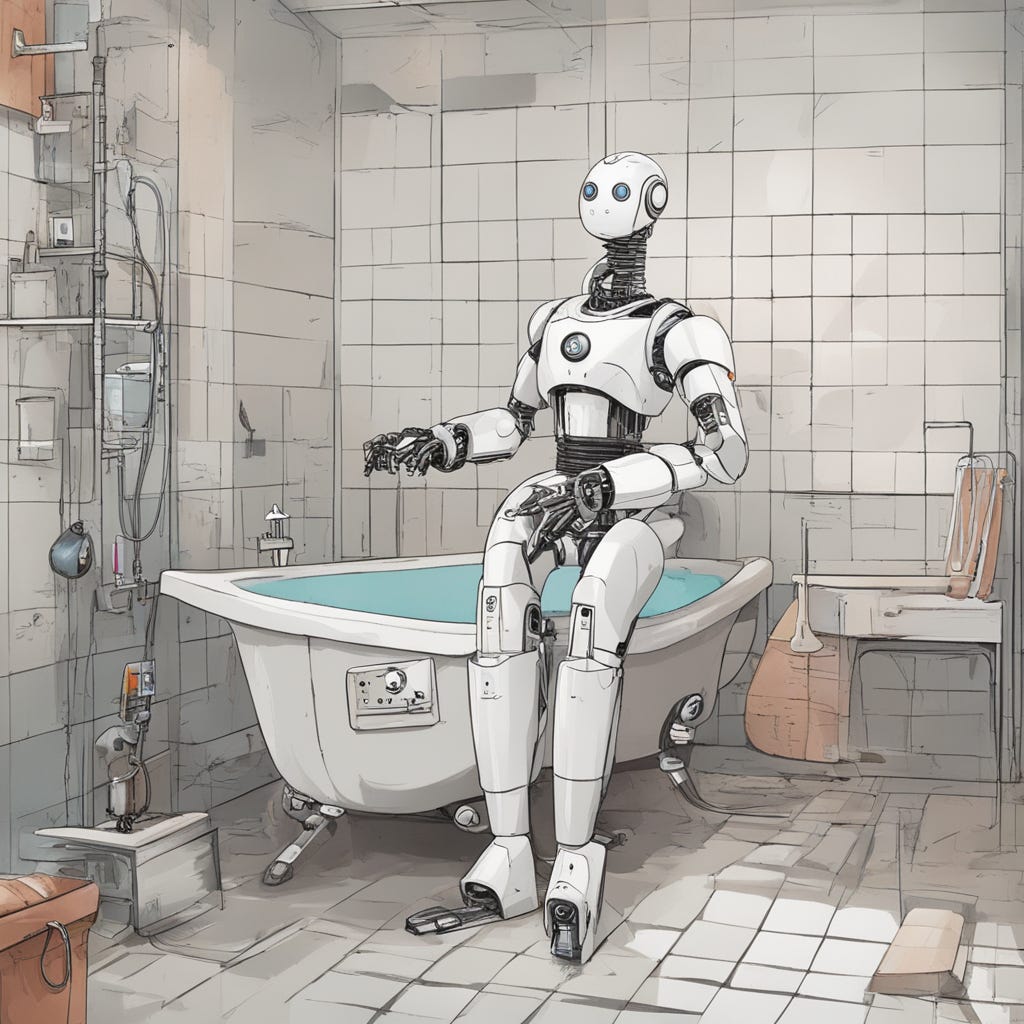

Talking makes for a qualitative difference. We had our conversation while I was loafing in the bath, Churchill style, with the machine talking to me via my iPhone speaker, perched on a shelf across the room. The conversation felt natural, albeit with a very slight processing delay, which I suspect will soon vanish. Even if it just gives a slice of what conversing with humans is like, it still feels very different to typing.

The machine has a handcrafted ‘personality’. It’s helpful, encouraging and warm. (Or at least the voice I was talking to was; one of the other voice presets seems just a fraction… judgmental, dare I say?) You won’t rile this machine, or get into a row with it. It won’t sulk, or try to score points. But these are human-crafted traits, as the machine itself acknowledged. It’s been designed explicitly to be helpful, always soliciting my views and offering to help further. There’s absolutely nothing to stop a machine from responding differently. You can even request it to do so – for example, by introducing a hint of sarcasm to its replies. It won’t take much for the machine to respond autonomously, in real time, with an attitude shaped by what you say.

And soon, it will be responding not just to what you say, but how you say it. Machines will generate appraisals of vocal tone – including your emotions. I’m sure they can already do this – it’s just training data, after all. The results might be a bit haphazard – to date, machines can’t read human emotion particularly well, but then, humans aren’t perfect either. Combine this emotional appraisal with their semantic understanding and watch them respond in very human-like fashion.

There’s more: machines can already describe what they see, in real time. We are heading, in the very near future, for models that can read body language and even, perhaps, models that smell pheromones. More rounded communicators, in short.

And, lastly, we’ll shortly have models that build an individual relationship with you over time, remembering faultlessly all your interactions, and through that, coming to really know you as a person. That doesn’t happen now – but only as deliberate policy. There’s nothing to stop it, except OpenAI’s restraint. In the not too distant future, our machines will learn about the particular human voices they interact with – coming to understand what makes you, a unique individual, sad, irritated, joyful and so on.

So, what do you think about an AI that interacts naturally, and that learns to anticipate your views and needs? In Ray Nayler’s fab sci fi novel The Mountain in the Sea, humans have virtual romantic partners, known as 0.5s. Why 0.5? Because they provide only the good half of a romantic relationship – not all the irritating frictions that come with a real, human relationship. It’s the same with Ana de Armas’ character Joi in Blade Runner 2049 – she’s just a little bit too perfect.

Sam Altman noted that superhuman persuasion ‘may lead to some strange outcomes’. Again – it’s easy to agree. That’s for another time, perhaps. Already though, I can see both downsides (the obvious scope for exploitation and manipulation) as well as upsides: The machine will, perhaps, act as Virgil to our Dante. Or, maybe we’ll use intelligent companions as a bulwark against loneliness. I was sceptical about that possibility, as I have been with unending stories of robots in Japanese care homes. Until, that is, I had my bath time conversation. I knew this was just a machine. It even told me, repeatedly, that it had no personhood, or self. But the illusion was very powerful anyway. Try it yourself. Strange times are coming.